You have just learnt the basics of a programming language at school or college or through an online course. You now know the basic components of a program. You are also able to solve some basic problems using small amounts of code.

But somehow when you are writing pieces of codes in professional capacity, you are always making multiple changes in your code and are constantly discussing things over long meetings. Maybe you are not as good a programmer as you thought you were?

I was faced with an exactly the same kind of problem a couple of years back, when I started my career as a software developer working on large code bases and developing pieces of software that would run in production and impact thousands of systems. Fortunately for me, I had the support of extremely patient peers and colleagues who were kind enough to spend some of their valuable time guiding me. These were people who had almost 15 to 20 years of experience writing programs that were efficient, easy to debug and easy to modify in face of frequent changes in requirements.

In this post I will list a few resources that were recommended by these seasoned programmers and why every programmer should have a look at them as well. Going through these resources surely changed the way I approached the problems and made me realize the immense knowledge that is still to be gained.

Here are some of the recommended readings for anyone who wants to program for a living:

- The Pragmatic Programmer by Andrew Hunt and David Thomas

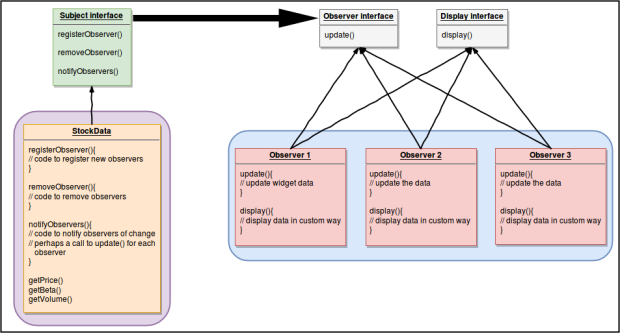

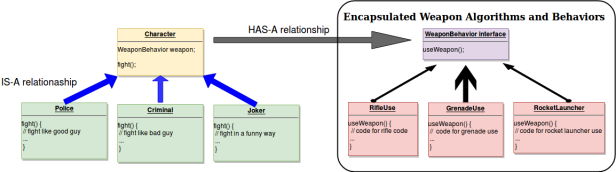

- Head First Design Patterns by Eric Freeman and Elisabeth Robson

-

Structure and Interpretation of Computer Programs by Gerald Jay Sussman and Hal Abelson

- Introduction to Algorithms by Cormen, Leiserson, Rivest and Stein

- Modern C++ Programming with Test-Driven Development by Jeff Langr

If you are also working with developing analytics solutions, using machine learning in your work and are looking to get a better understanding of the various algorithms that you are working with, then you should also have a look at these books:

- Machine Learning by Tom Mitchell – A good introduction to the basic concepts of Machine Learning. Best when studied in parallel to following the Machine Learning course by Andrew Ng. Recommended for beginners to advanced level learners.

- An Introduction to Statistical Learning by Gareth James, Daniela Witten, Trevor Hastie and Robert Tibshirani – A good introduction for anyone entering at a beginner/junior level as a Data Analyst or a Data Scientist. Provides really good introduction to the basic machine learning concepts as well as their code in R language. Recommended for beginner to medium level learners.

-

The Elements of Statistical Learning by Jerome H. Friedman, Robert Tibshirani, and Trevor Hastie – A really great book for understanding the concepts of machine learning and understanding the mathematical and statistical properties behind them. Recommended for medium to advanced level learners.

- Deep Learning by

- Data Science for Business by Foster Provost and Tom Fawcett – A great read for anyone at any level. It helps you really understand some aspects of the Data Science field which might not be very intuitive for a beginner or someone coming in from a purely computer science background.

-

Modeling the Internet and the Web by

The second list of books are not for light reading and require a sufficient amount of devotion. I am still reading some of them even after 2 years and going back to them multiple times for better understanding. However, they are all worth your time and will definitely reward you over the next couple of years as we work on more complex problems and design more sophisticated systems.

Good luck on your journey to becoming a better programmer 🙂

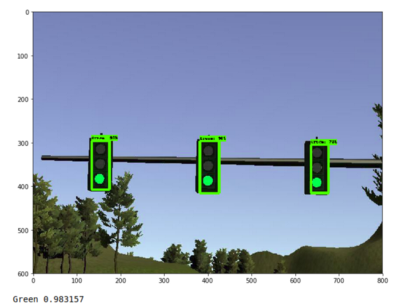

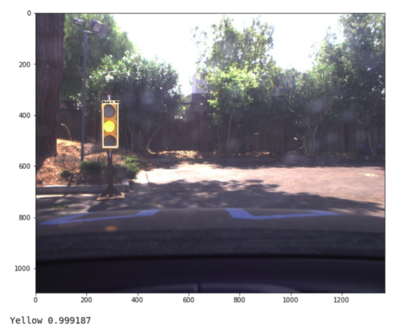

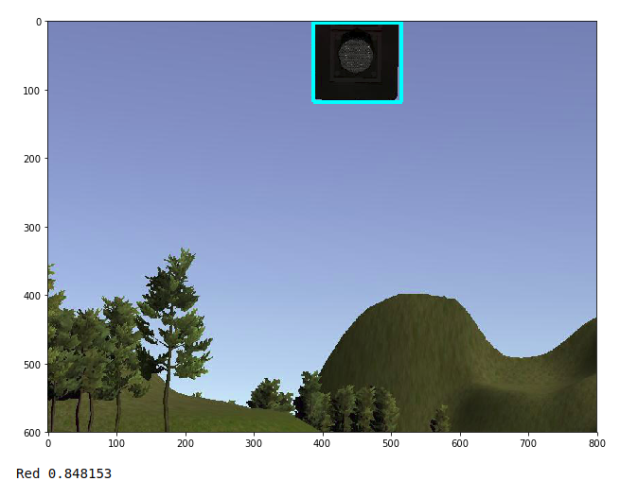

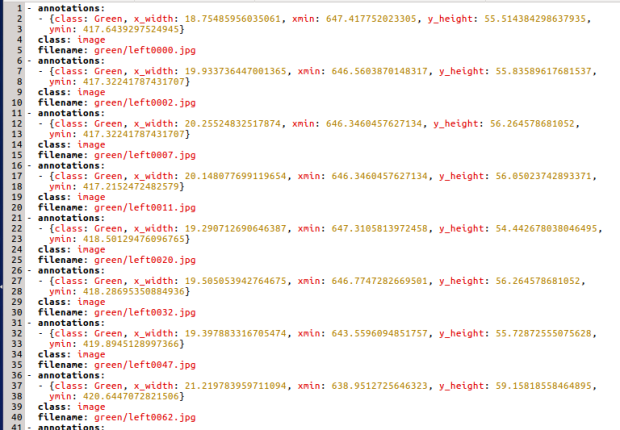

Data the real car’s camera captured from the track

Data the real car’s camera captured from the track Output after manual image annotations

Output after manual image annotations label_map

label_map