A few days ago my mentor gave me the task of reading an XML file and then using its data to create a new XML file in a different format. Since I was given complete freedom to use any programming language, I decided to go with C, as taking CS50x had given me enough confidence to approach any programming dragon with my shining C sword and armor. However, after a few hours I realized that I was getting nowhere, so I decided to look into Python. It turns out that working with XML files in Python is remarkably simple.

For my task I made use of two modules from Python's standard library: `xml.etree.ElementTree` and `xml.dom.minidom`.

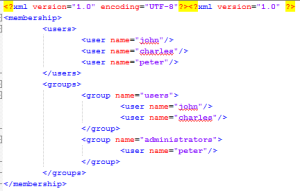

I will now give you a step-by-step guide to creating a nicely indented (pretty-printed) XML file in Python, as shown below, along with some tips on how to read an XML file.

Step 1: Importing the libraries.

```python
import xml.dom.minidom

from xml.etree import ElementTree
from xml.etree.ElementTree import Element, SubElement
```

Step 2: Creating the root element.

```python
# <membership/>
membership = Element('membership')
```

Step 3: Creating children of the root. In this example the root gets two children.

```python
# <membership><users/>
users = SubElement(membership, 'users')

# <membership><groups/>
groups = SubElement(membership, 'groups')
```
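`SubElement` is a convenience factory: it creates a new element and appends it to the given parent in one call. A minimal sketch of the equivalent two-step form, reusing the tag names from this step:

```python
from xml.etree.ElementTree import Element, SubElement, tostring

# Build the root and one child with SubElement
membership = Element('membership')
users = SubElement(membership, 'users')

# The same thing done by hand: create a detached element, then append it
groups = Element('groups')
membership.append(groups)

# Both children are now attached to the root, in insertion order
print([child.tag for child in membership])     # ['users', 'groups']
print(tostring(membership, encoding='unicode'))
# <membership><users /><groups /></membership>
```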

Step 4: Creating nodes inside the children.

```python
# <membership><users><user/>
SubElement(users, 'user', name='john')
SubElement(users, 'user', name='charles')
SubElement(users, 'user', name='peter')

# <membership><groups><group/>
# Create the first group before adding users to it ('users' is an assumed name)
group = SubElement(groups, 'group', name='users')

# <membership><groups><group><user/>
SubElement(group, 'user', name='john')
SubElement(group, 'user', name='charles')

# <membership><groups><group/>
group = SubElement(groups, 'group', name='administrators')

# <membership><groups><group><user/>
SubElement(group, 'user', name='peter')
```
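Putting Steps 2 to 4 together, a self-contained sketch might wrap the tree construction in a function and sanity-check the result with `findall()`. The function name `build_membership` and the first group's name `'users'` are my own choices for illustration:

```python
from xml.etree.ElementTree import Element, SubElement

def build_membership():
    """Build the membership tree from Steps 2-4 (hypothetical helper)."""
    membership = Element('membership')
    users = SubElement(membership, 'users')
    groups = SubElement(membership, 'groups')

    for name in ('john', 'charles', 'peter'):
        SubElement(users, 'user', name=name)

    # Group name -> member names; the 'users' group name is an assumption
    memberships = {
        'users': ['john', 'charles'],
        'administrators': ['peter'],
    }
    for group_name, members in memberships.items():
        group = SubElement(groups, 'group', name=group_name)
        for member in members:
            SubElement(group, 'user', name=member)
    return membership

root = build_membership()
print(len(root.findall('users/user')))                        # 3
print([g.get('name') for g in root.findall('groups/group')])  # ['users', 'administrators']
```

Driving the loops from a dictionary keeps the data separate from the tree-building code, which helps when the data comes from another file, as in my original task.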

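Step 5: Converting the tree to a string and pretty-printing it.

```python
# Serialize the element tree to a byte string
string = ElementTree.tostring(membership)

# Re-parse it with minidom, whose toprettyxml() adds indentation
xmls = xml.dom.minidom.parseString(string)
pretty_xml_as_string = xmls.toprettyxml()
```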
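By default `toprettyxml()` indents with tab characters, but it also accepts an `indent` argument. A small sketch that pretty-prints a cut-down version of the tree with two spaces per level:

```python
import xml.dom.minidom
from xml.etree import ElementTree
from xml.etree.ElementTree import Element, SubElement

membership = Element('membership')
users = SubElement(membership, 'users')
SubElement(users, 'user', name='john')

# ElementTree -> bytes -> minidom Document -> indented string
string = ElementTree.tostring(membership)
pretty = xml.dom.minidom.parseString(string).toprettyxml(indent='  ')
print(pretty)
```

The printed result starts with the `<?xml version="1.0" ?>` declaration, followed by `<membership>`, then `<users>` indented by two spaces and `<user name="john"/>` by four.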

Step 6: Writing to a file.

```python
# toprettyxml() output already starts with the <?xml version="1.0" ?> declaration
with open('membership.xml', 'w') as output_file:
    output_file.write(pretty_xml_as_string)
```
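On Python 3.9 or newer, `ElementTree.indent()` can indent the tree in place, which avoids the round trip through `minidom`. This is a different technique from the one used above; a sketch of it, writing into a temporary directory so it does not overwrite your own `membership.xml`:

```python
import os
import tempfile
from xml.etree import ElementTree
from xml.etree.ElementTree import Element, SubElement

membership = Element('membership')
users = SubElement(membership, 'users')
SubElement(users, 'user', name='john')

tree = ElementTree.ElementTree(membership)
ElementTree.indent(tree, space='  ')  # requires Python 3.9+

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, 'membership.xml')
    # xml_declaration=True writes the <?xml ...?> header before the root
    tree.write(path, encoding='utf-8', xml_declaration=True)
    with open(path, encoding='utf-8') as f:
        written = f.read()

print(written)
```

Note that `ElementTree` writes the declaration with single quotes and an explicit encoding (`<?xml version='1.0' encoding='utf-8'?>`), which differs slightly from the `minidom` output.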

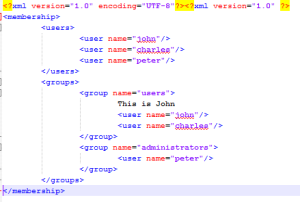

This will create a nicely indented XML file for us. If you also want to add a text node, set the `text` attribute of an element before converting the tree to a string in Step 5:

```python
group.text = "This is John"
```

This works for any element created with `Element` or `SubElement`, and the text will appear between that element's opening and closing tags in the output file.
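A short sketch of how `text` and its counterpart `tail` (text that follows an element's closing tag, which comes up again when reading files below) show up in the serialized output. The element names and strings are only illustrative:

```python
from xml.etree.ElementTree import Element, SubElement, tostring

group = Element('group', name='users')
group.text = 'This is John'          # text directly inside <group>
user = SubElement(group, 'user', name='john')
user.tail = ' (member)'              # text after <user/>, still inside <group>

print(tostring(group, encoding='unicode'))
# <group name="users">This is John<user name="john" /> (member)</group>
```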

When reading an XML file, we proceed hierarchically, from the root element down through its children.

```python
from xml.etree import ElementTree

document = ElementTree.parse('membership.xml')
```

`document` holds an `ElementTree` object. It is not itself a node in the XML structure, but it provides a handful of methods for consuming the element hierarchy parsed from the file. Often there is more than one way to reach the same element; which one you choose is largely a matter of taste and of the task at hand. For example:

```python
users = document.find('users')
```

is equivalent to:

```python
membership = document.getroot()
users = membership.find('users')
```
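To try this equivalence without a file on disk, you can hand `ElementTree.parse()` any file-like object. A sketch using an in-memory string that mirrors a small part of membership.xml (the sample content is an assumption); it also shows `ElementTree.fromstring()`, which returns the root element directly instead of an `ElementTree`:

```python
import io
from xml.etree import ElementTree

SAMPLE = """<membership>
  <users><user name="john"/><user name="peter"/></users>
  <groups><group name="administrators"><user name="peter"/></group></groups>
</membership>"""

# parse() accepts a filename or a file object; StringIO stands in for the file
document = ElementTree.parse(io.StringIO(SAMPLE))

via_tree = document.find('users')
via_root = document.getroot().find('users')
print(via_tree is via_root)  # True: both calls return the same Element object

# fromstring() skips the ElementTree wrapper and returns the root Element
root = ElementTree.fromstring(SAMPLE)
print(root.tag)  # membership
```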

Finding specific elements

XML is a hierarchical structure. Depending on what you do, you may want to enforce a certain hierarchy of elements when consuming the contents of the file. For example, we know that membership.xml defines users along the path membership -> users -> user, so you can quickly get all the `user` nodes with a path expression:

```python
for user in document.findall('users/user'):
    print(user.attrib['name'])
```

Likewise, you can quickly get all the groups by doing this:

```python
for group in document.findall('groups/group'):
    print(group.attrib['name'])
```
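`find()` and `findall()` accept a limited subset of XPath, not just plain slash-separated paths. A sketch on sample data mirroring Step 4 (the `users` group name is assumed) that uses `.//` to search at any depth and an `[@name='...']` predicate to select a single group:

```python
from xml.etree import ElementTree

XML = """<membership>
  <users><user name="john"/><user name="charles"/><user name="peter"/></users>
  <groups>
    <group name="users"><user name="john"/><user name="charles"/></group>
    <group name="administrators"><user name="peter"/></group>
  </groups>
</membership>"""

root = ElementTree.fromstring(XML)

# './/user' matches <user> elements at any depth below root, in document order
all_user_refs = [u.get('name') for u in root.findall('.//user')]

# Attribute predicate: only the users inside the administrators group
admins = [u.get('name')
          for u in root.findall("groups/group[@name='administrators']/user")]

print(all_user_refs)  # ['john', 'charles', 'peter', 'john', 'charles', 'peter']
print(admins)         # ['peter']
```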

Iterating elements

Even after finding specific elements or entry points in the hierarchy, you will normally need to iterate over the children of a given node. This can be done like this:

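```python
for group in document.findall('groups/group'):
    print('Group:', group.attrib['name'])
    print('Users:')
    # Iterating over an element yields its direct children
    # (older code uses getchildren(), which was removed in Python 3.9)
    for node in group:
        if node.tag == 'user':
            print('-', node.attrib['name'])
```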
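The same nested loop is a convenient way to turn the file into ordinary Python data. A sketch that collects each group's members into a dictionary; the helper name `group_members` and the sample XML are hypothetical:

```python
from xml.etree import ElementTree

def group_members(root):
    """Map each group's name to the names of its <user> children."""
    result = {}
    for group in root.findall('groups/group'):
        # Iterating over an element yields its direct children only
        result[group.get('name')] = [
            node.get('name') for node in group if node.tag == 'user'
        ]
    return result

root = ElementTree.fromstring(
    '<membership><groups>'
    '<group name="users"><user name="john"/><user name="charles"/></group>'
    '<group name="administrators"><user name="peter"/></group>'
    '</groups></membership>'
)
print(group_members(root))
# {'users': ['john', 'charles'], 'administrators': ['peter']}
```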

Other times, you may need to visit every single element in the hierarchy below a given starting point. There are two ways of doing it, with a subtle but important difference: one walks the starting element together with all of its descendants, the other yields only its direct children.

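Iterate over nodes, including the starting point:

```python
users = document.find('users')

# iter() yields users itself, then all of its descendants
# (older code uses getiterator(), which was removed in Python 3.9)
for node in users.iter():
    print(node.tag, node.attrib, node.text, node.tail)
```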

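This produces the output below, assuming membership.xml contains no indentation. If you parse the pretty-printed file from Step 6, `text` and `tail` hold the newline and tab characters used for indentation instead of `None`:

```
users {} None None
user {'name': 'john'} None None
user {'name': 'charles'} None None
user {'name': 'peter'} None None
```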

Iterate over the children only:

```python
users = document.find('users')

# Iterating over an element yields only its direct children
for node in users:
    print(node.tag, node.attrib, node.text, node.tail)
```

Produces this output:

```
user {'name': 'john'} None None
user {'name': 'charles'} None None
user {'name': 'peter'} None None
```
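On the `users` element the two approaches differ only by the starting element, because the `user` nodes have no children of their own. The difference is easier to see on the `groups` subtree, where `user` elements sit one level deeper. A sketch on sample data mirroring Step 4 (group names assumed), which also shows that `iter()` accepts a tag name to filter the walk:

```python
from xml.etree import ElementTree

groups = ElementTree.fromstring(
    '<groups>'
    '<group name="users"><user name="john"/><user name="charles"/></group>'
    '<group name="administrators"><user name="peter"/></group>'
    '</groups>'
)

# Direct children only: the two <group> elements
children = [node.tag for node in groups]
# The starting element plus every descendant, in document order
everything = [node.tag for node in groups.iter()]
# iter(tag) walks the same subtree but yields only matching elements
user_names = [node.get('name') for node in groups.iter('user')]

print(children)    # ['group', 'group']
print(everything)  # ['groups', 'group', 'user', 'user', 'group', 'user']
print(user_names)  # ['john', 'charles', 'peter']
```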